��Ϊ��һ�ֿƼ�������ҵ���Ľ����������������������ܻ����ѳ�Ϊδ����ҵ���������������Ϊ�������˹�����������Ѹ�͵�����֮һ�����������ѧϰ̫ͨ���������ȡ��������������Ϣ��������������ͼ�����������������˽����ֳɵ�Ӧ��֮����������ҲΪͳ��ѧ���۵��о����췭�����µ��������2020��11��19����������������ΰ�ײ�����ͳ���뾭�ü���ϵ�����ġ����ѧϰ��ͳ��ѧ���ۡ����л��ڱ���ΰ�ײ��ֳɾ���������Ժ�������ԺУ����λ����ͳ��ѧ��Ӧ���������µ�����Ч�������˷�����̽����������Ϊ���ϡ�������ϼ������500λ��Уʦ����ҵ����ʿ������һ�����ѵ�ͷ��ѧ��ʢ�����

Event Highlights

Opening Ceremony

������ ������ ΰ�ײ�����ͳ���뾭�ü���ϵ���ڡ�ϵ����

�ۻ���ΰ�ײ�����ͳ���뾭�ü���ϵ�������������ڵ�������������Ļ����������������Ǵ���ѧԺ�´����������ؽӴ������������ϵ�ʦ��ѧ���Ǽ��뱾����������������ע���˹����ܵķ��������������������ѧϰ��Ϊ���óͷ��ǽṹ�����ݵ�һ���ֶ���������������ģ��ҵ��Ӧ���ѳ�Ϊ�����ߵ�����ҵ��ʶ��һ��ƫ�����Ϊ�����������������ѧϰƽ̨������ҵӦ��������������֧�ֹ�ҵ���ܻ���������Ҳ�Ѿ���ΪĿ��ѧ�������ҵ�����ֿ��ȵ��о�Ӧ��ƫ�����������ڴ������ܹ���������������������������ջ����

�´Ǽα� ������ ΰ�ײ���ί���

Keynote Talks

����Ԫ�ء����ͻ�еѧϰ��ʵ��

Talk title: Prediction, Computation, and Representation: The Nature of Machine Learning

�����ˣ� ��־����������������ѧ��ѧ��ѧѧԺ

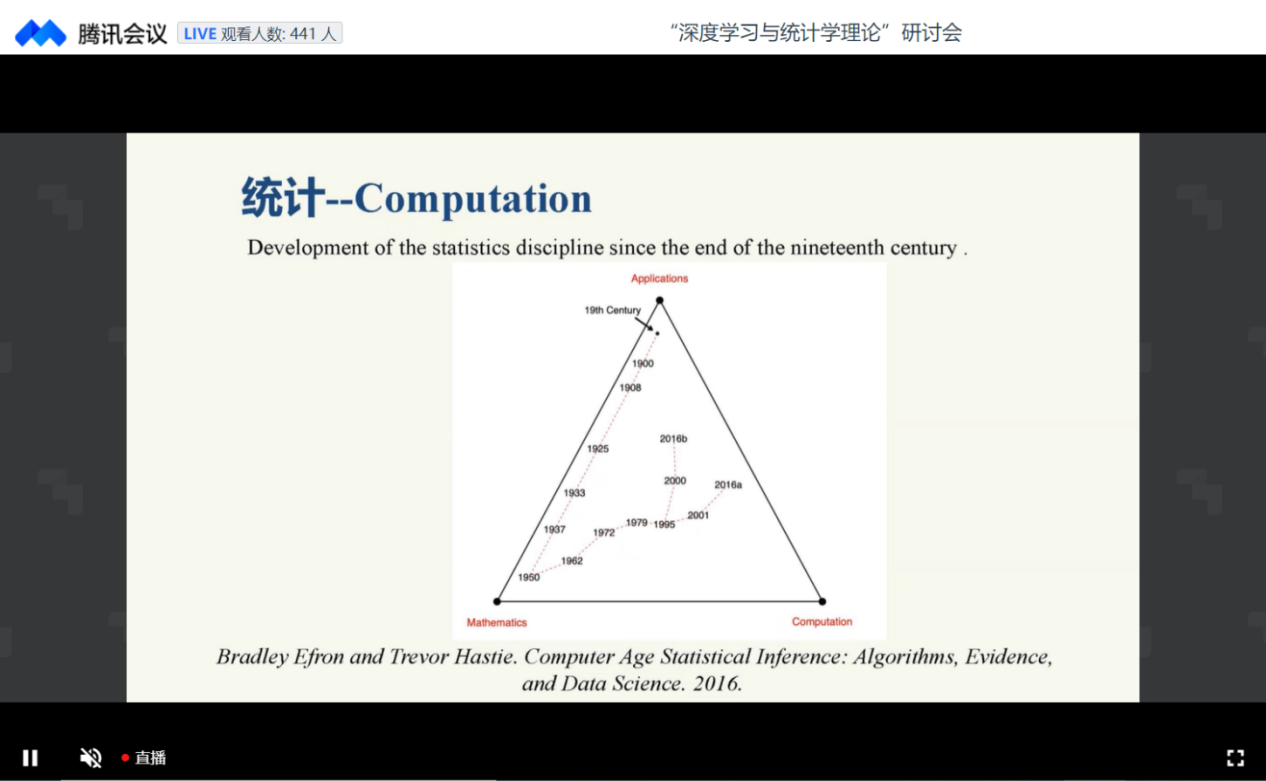

��־�������ڱ����жԻ�еѧϰ��ͳ��ѧ��Ӱ����������˼�Ҫ�����������������Ȼ�������λ����ͳ��ѧ��Leo Breiman ��Bradley Efron�����ڸ������ġ�Statistical Modeling: The Two Cultures���롰Prediction, Estimation, and Attribution���ж�ͳ��ѧ�ͻ�еѧϰ֮�佨ģ�������������������˵������еѧϰ��������ͳ��ѧ���������Ӱ������ܡ����ѧϰ���롰ͳ��ѧ�������ֽ�ģ�Ļ�������۵��������������Ž��������������еѧϰ����Ҫ�أ�Prediction, Computation��Representation�����PredictionΪ����Ŀ������������Computation��Ϊ��������;�����������ӡ�Representation���Ƕ���ڹ�ͻ�еѧϰ����Ž�����ע����������Computation��������������еѧϰ��Ҫ��ע�������������������ɢ��������������������ѵ���������Ż����������������еѧϰ����ע��������ڲ��Լ��ϵķ�����������������ʵ���Ż��㷨�ͷ������۵��л�ͳһ�����Representation����������ģ��������ȡ�����������������ᴮ�����������Dimensionality Curse����ʹ�á�Dimensionality Blessing�������������ѧϰ������ڹ����������֮���Ȩ�������־��������������������Ҳ������Ϊֹ�ѡ�Data Modeling Culture���͡�Algorithmic Modeling Culture����Ϊһ����������;�����

���ɭ�֡������ˡ��Dz����ѧϰ�ġ����š�

Talk title: A First Look at the Theory of Nonparametric Deep Learning

�����ˣ� ��ξ���������Ͼ���ѧ�˹�����ѧԺ

��ξ���ڵĿ���������������ڷDz����ѧϰ���о�������������������ǷDz������������ֵ����ɭ��ģ�������������Dz����ѧϰ�����ʹ����ȡ���������ѧϰ�൱��Ч�����������������ɢ��ѧϰʹ���������ֳ����õ�Ч������߽��ڵı���Χ���������Ŀ������ڷDz����ѧϰ����ȡ�õ�������Դϣ����������������������������о�����������ڹ������������������ָ���Dz����ģ�ӵĹ�������߽����ԡ�Deep Forests��Ϊ������������������ڵ����ѧϰ�������Ű�������������̫ͨ����������������ɵ�ѵ��������������������߽������ֿ�ʹ��һ�����ļ������Relu��������������BP�㷨����ѵ�������Ű��еѧϰҪ����������������ѧϰ����Ҫ�˹�������루��ͼ��������������ͨ���㷨�Զ�ѧϰ��������ڴ����������߽���ָ�������������ڵ�������ѧϰЧ��������������ҪԴ��3��Ե��ԭ�ɣ�1���������ݴ��óͷ�����2���������ڲ��任���3���㹻ǿ��ģ����Ư�������ͬʱ��������������ѧϰҲ�����������⣺1������������2������ѵ�����3�����㿪�������������ʵӦ���վ�ѧ���о��IJ������������������ó��о�������������ѧϰҪ�����������ɴ˶�����ˡ�Deep Forests���Ŀ��������Deep Forests��ʹ���ˡ�Random Forest�����������ܹ�ʵ����㴦�óͷ�������������µ������������ʵ�����ְ���������������ģ�ӵ������������ع��DNN���Ϊ�˽�һ��֤ʵ������Խ�����������߽��ڸ�����Deep Forest�������������������������֪���ض�������ģ���������������������µ�forests��һ����֤ʵ����������֤ʵ�������������ѧϰ�Ľ�ģ�����ṩ�˺ܺõ�ָ��ƫ�����

���������뷢����̽�����ѧϰ����������

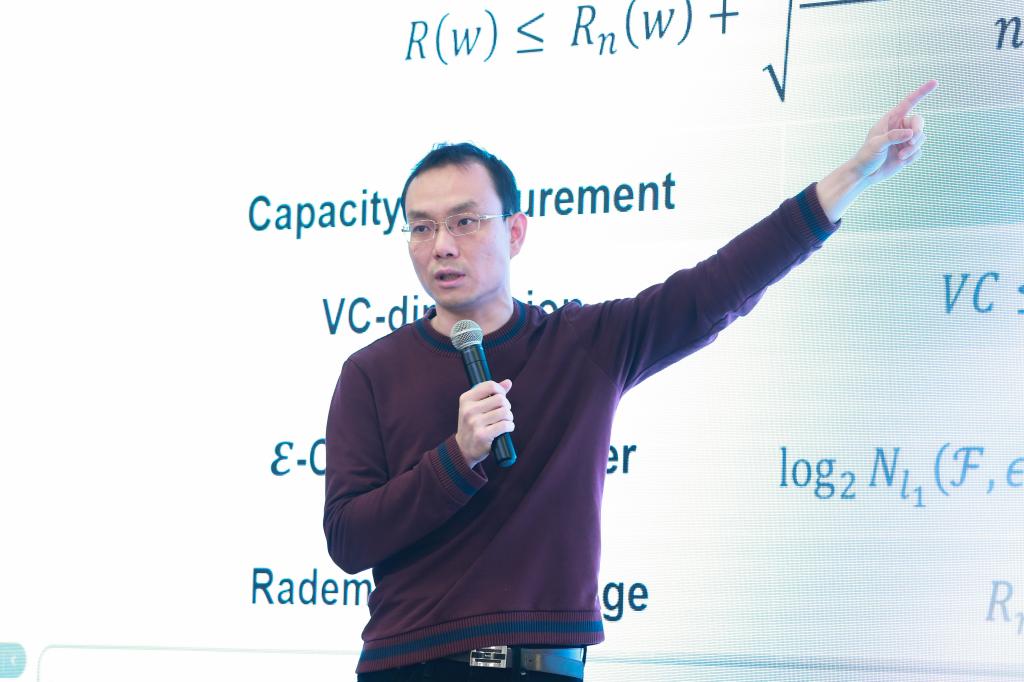

Talk title: Deep learning: from theory to algorithm

�����ˣ� ��������������������ѧ��Ϣ��ѧ����ѧԺ

�����������ڱ������ص����������Ŷӽ��������ѧϰ���۷�����о�Ч��������㷨��Ƶ�ָ���������Ϊ��Ҫ���Դ����������о����ѧϰ���������ӣ�ģ�ӵ������������ڲ��Լ��ϵķ��������Լ���ѵ�����ϵ��Ż��������������������������������о��������������ڼ����Ŷ�֤ʵ������������Ͽ��������ά���Լ���ȿ��������������������������������������һ��Universal Approximator���������������⾫���Ƚ�һ���ɲ⺯��������������ѧϰ�ķ����������о�����������������������������Ȼ�����������һ������������ģ��������������Ȼ���ֳ���ǿ�ķ�����������������˾����ͳ��ѧϰ���ۿ��ܲ���������������ڻ��ִ�ģ����Ư���ѷ�ç㷨�ĽǶ�ڹ�������ѧϰ�ķ�������������������������ʹ��SGLD�㷨�������������������ѧϰ�ķ�������Ͻ����������������������ѧϰ���Ż��㷨���о���������������֤ʵ������������ֿ��Լ��������ʼ���Ļ����DZ�ȫ����Ƶ����������������������ʼ�������������ʹ�ã�������ݶ��½��������Ժܴ�ĸ����ҵ�ȫ�����ŵ��������������ִܵ�ָ��������������ڴ�����Ч���������������������Ŷ�����˶����Ż��㷨����Gram-Gauss-Newton�㷨������������ѵ�����������������㷨���ж�����������������������ÿ�ε�����������Ư����SGD������

����AI�˳���Ļ�����桪����Ⱦ���������

Talk title: Progressive Principle Component Analysis for Compressing Deep Convolutional Neural Networks

�����ˣ��ܾ����������й������ѧͳ��ѧԺ

�ܾ����������о���ͷ��������������������Ϊ���ѧϰ��������ľ������������Ų���������������������size��С��������������Ѹ���������������Ӷ�����Ȩ�ؾ���w��ά�ȼ��ߵ���������ͬʱ����Computation��Storage��������������Ҳ����ֱ�Ӱ������ƶ�����������ڴ����������ܽ��������һ�ֽ�������������(PPCA)Ҫ��Ծ������н�ά��ѹ����Ⱦ��������������ϸ��������������һ��Ԥ��ָ���IJ����������������ƶ�������������������ÿ��Ŀ�IJ���������PPCA��ÿһ�εľ�����reshape��һ���������������ѡ���ۼƷ���Т˳����ߵļ���������������PCA��ά���������⽫������̭Ŀ����е��ں���Ŀ�����ά����������Ŀ����shape�����ı���������Ӱ������һ����������������Ҫ�ȶ���һ���shape�����������پ���PCA��ά������������Ŀ�����ʹ�õ��ں���Ŀ��������һ���ͨ����Ŀ��������������һ���ͨ��Ҳ�����̭������������ģ�ӽṹ���Ա����ѹ������������������Ŀ��������Ǯ�����Դ����������ܽ��ڽ����֮Ϊ��Progressive Principle Component Analysis������ܽ��ڵ��о�����һЩ�����CNNs (AlexNet, VGGNet, ResNet��MobileNet)�ͻ����ݼ��������˸�Ҫ������������ʵ����ע����������ijЩ�ض�ģ������������PPCA��ģ��ѹ���ʴ�չ�����ʿ������������Ҿ���û��̫����ʧ�����PPCA�����������е�ģ���ж���������ľ��������������ܽ���ָ��������������PPCAû��˼������ѡȡ���ŵĵ���������������������н�һ�����о��ռ����

����Ϣ����Ϊ�����ĵ��Ĵι�ҵ�������ƶ������������˹�����ʱ������������ͬȫ�����ι�ҵת�������������������ڳ�������Ҫ�ص���̬�ݽ������������ѧϰ�ǽ����������˹������������������ߵ�����֮һ����������ͳ��ѧ���Ž�����ײ�Ʊػ�����µĻ�����������л��ͳ��ѧ�������ѧϰ���Ž��о�������������������������ͬʱҲ�������������ר��ѧ����֮��Ľ�����̽����������Ϊ����ͳ�����ݿ�ѧ���콨���������ƽ̨�����������ʦ����ѧ�߶����ֻ����dz���

��ΰ�ײ�����ͳ���뾭�ü���ϵ��

ΰ�ײ�����ͳ���뾭�ü���ϵ���б�����ѧ������˼�����ɰٴ�����ѧ����������������ΰ�ײ�����ѧԺ����������֪ʶ�������������̽��������������ƶ����ǰ��������ʷʹ�����������ԡ�ΰ�ײ�ͷ������Ϊê���������۽�һϵ������ͳ�������ش���������о�̽�����������������Ƹ��˹�������ͳ��ѧ���۵Ľ������������ֵѧԺ����35����֮������������ΰ�ײ�ѧ��֮�ǻ�������������Ƚ���֮ƽ̨��������̫ͨ��������ѧ���о�Ч��������������ѧ���������������ƶ����ǰ�����